The most common mistake made in test system development is to focus on the hardware and software while ignoring infrastructure issues such as data storage, deployment, and scalability. Often, problems do not surface until the system has been deployed in production, and at that point fixing them can be costly and time-consuming. For this reason, we will focus on optimizing the production test process.

To ensure seamless test system development and deployment, you’ll need to consider the four best practices below during production test:

1. Decrease Overall Test Cost

Cost is a key factor in the test system development process, especially for a high-volume application. Because production testing is usually an inspection process that adds no material value, test cost is highly scrutinized. The following can help teams reduce overall test cost, particularly in the production testing phase:

- Reduce test system development cycle time by using a systematic approach to test development and improvement, using programming tools that are focused on test and measurement, reusing as much code as possible, and consulting with experts to inform your design.

- Increase test throughput by testing several UUTs in parallel with multi-UUT test execution systems, creating multi-threaded test code to run tasks in parallel, and using state-caching instrument drivers to reduce configuration time.

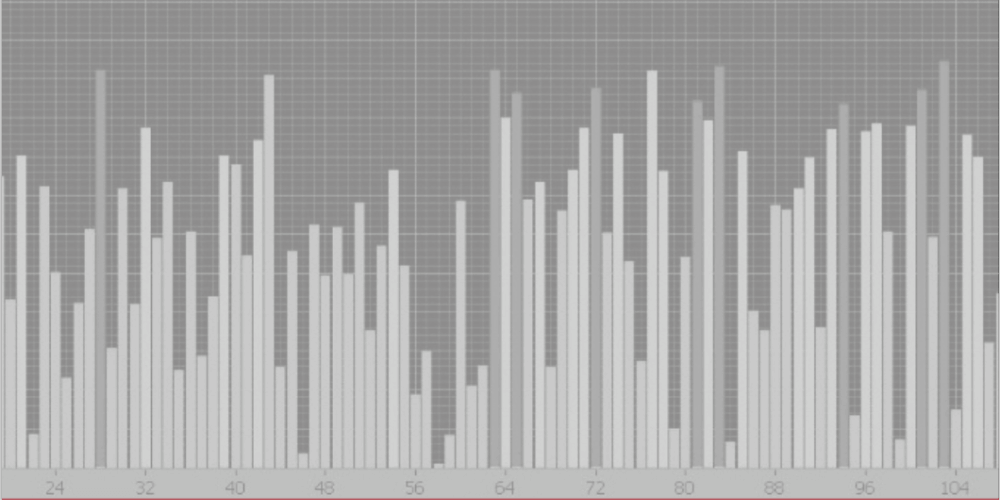

- Reduce test maintenance cost by using remote configuration, monitoring, and analysis interfaces; automating alarm notification systems to decrease system failure response time; and implementing monitoring tools to track and visualize system performance changes early – before major repairs are needed.
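
As a rough illustration of the throughput ideas above, the sketch below combines a state-caching driver with parallel execution across several UUTs. The `CachingDriver` class, the `range_v` setting, and the serial numbers are hypothetical names invented for this example; a real driver would replace `_send` with actual instrument I/O.

```python
from concurrent.futures import ThreadPoolExecutor


class CachingDriver:
    """Hypothetical driver that skips redundant configuration writes."""

    def __init__(self):
        self._state = {}   # last value sent for each setting
        self.writes = 0    # number of commands actually sent

    def configure(self, setting, value):
        # Only talk to the instrument when the requested value
        # differs from the cached one.
        if setting in self._state and self._state[setting] == value:
            return
        self._send(setting, value)
        self._state[setting] = value

    def _send(self, setting, value):
        # Placeholder for real instrument I/O (assumption).
        self.writes += 1


def run_uut(serial):
    """Run a short sequence on one UUT with its own driver instance."""
    driver = CachingDriver()
    driver.configure("range_v", 10)
    driver.configure("range_v", 10)  # already cached, no write
    driver.configure("range_v", 1)   # value changed, one more write
    return serial, driver.writes


def run_batch(serials, max_workers=4):
    # Threads suit I/O-bound instrument calls; map() keeps input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_uut, serials))
```

Giving each UUT its own driver instance avoids sharing cached state between threads, which keeps the sketch free of locks. A shared instrument would need explicit synchronization.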

2. Improve Test Integrity

The second major challenge in production testing is test integrity. Production testing is critical because it is usually the last chance to catch a bad product before it reaches a customer. To ensure your system is producing data you can trust, consider the following:

- Continually improve testing effectiveness by feeding data from the test execution process back into the test development process to create a test development model for improvement, using a formal change review process when releasing new code or making changes to existing code, and implementing coding standards and style guides to define best practices for your organization.

- Reduce false failures and false passes by defining a standard for how software errors should be handled and applying it consistently throughout the code, periodically introducing “known-good” and “known-bad” UUTs into the test system to check test performance, and tracking common failure modes for the test system.

- Improve measurement capability by performing repeatability and reproducibility tests on equipment and software and reusing and customizing proven tests for products with similar designs.

- Track all changes by using a trusted software change management application and monitoring all alterations to system configurations including software assignments, calibration data, and equipment serial numbers.
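
The periodic “known-good” and “known-bad” check mentioned above could be sketched as follows. This is a minimal example, assuming each reference unit is represented by a single voltage reading and that a test is a function returning `True` for a pass; the unit IDs and limits are made up for illustration.

```python
def make_limit_test(low, high):
    """Build a pass/fail test that checks a reading against limits."""
    def test(voltage):
        return low <= voltage <= high
    return test


def check_reference_units(test_fn, known_good, known_bad):
    """Run test_fn on reference units and report disagreements.

    known_good and known_bad map a unit ID to its reading.
    """
    problems = []
    for unit_id, reading in known_good.items():
        if not test_fn(reading):
            problems.append(("false_failure", unit_id))
    for unit_id, reading in known_bad.items():
        if test_fn(reading):
            problems.append(("false_pass", unit_id))
    return problems


# Hypothetical reference units for a nominal 5 V supply.
good = {"G1": 5.00}
bad = {"B1": 5.60}

# Correct limits: both reference units are classified as expected.
ok = check_reference_units(make_limit_test(4.75, 5.25), good, bad)

# Limits set too wide: the known-bad unit slips through.
loose = check_reference_units(make_limit_test(4.75, 6.00), good, bad)
```

An empty result means the station still agrees with the reference units. Any entry is a prompt to investigate the test, the limits, or the equipment before more product is run.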

3. Take a System Approach to Testing

In many cases, production test development efforts focus specifically on developing test algorithms and interfacing with test instruments. Test engineers tend to optimize the software and hardware closest to the test station. However, to fit production testing into a comprehensive test system architecture, you must view the test process as a whole and consider all activities involved in creating and supporting product tests, including:

- Information Management – how data is produced, stored, and consumed – is critical to a comprehensive test system. The purpose of information management is to release tests and test system components and make them available for deployment to a test station or series of test stations on the production floor. Information management must take into consideration inputs and outputs and their related source code, an integrated development environment to implement test modules and plug into a development environment, a test sequence editor, and a software change management tool.

- Test System Administration encompasses the steps necessary to deploy tests to the test stations, and likewise must take into consideration inputs and outputs, a local configuration interface, and a remote configuration interface.

- Test Execution uses the components created in the test implementation process and the data configured in the test administration process to generate results. The execution system has direct impact on test cycle time and is most powerful and flexible if built on modular components with well-defined interfaces and behaviors, including inputs and outputs, a sequencing engine, a data abstraction module, a process module, a test sequence, functional test modules, instrument abstraction modules, and instrument drivers.

- Test Process Control and Data Analysis helps to evaluate data from ongoing production tests and to feed information back into future test design and implementation so that test efficiency and effectiveness are continually improved. This includes inputs and outputs, a real-time remote monitoring and control interface, and a data reduction and analysis interface.
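
To make the modular execution idea more concrete, here is a small sketch of a sequencing engine, a functional test module, and an instrument abstraction with a simulated driver behind it. All names (`Voltmeter`, `SimulatedVoltmeter`, `supply_voltage_test`, `run_sequence`) and the 5 V limits are assumptions for illustration, not part of any particular test framework.

```python
from typing import Protocol


class Voltmeter(Protocol):
    """Instrument abstraction: what a test module needs from a meter."""

    def read_voltage(self) -> float: ...


class SimulatedVoltmeter:
    """Stand-in for an instrument driver; returns a fixed reading."""

    def __init__(self, reading: float):
        self._reading = reading

    def read_voltage(self) -> float:
        return self._reading


def supply_voltage_test(meter: Voltmeter) -> bool:
    """Functional test module: pass if the reading is within 4.75-5.25 V."""
    return 4.75 <= meter.read_voltage() <= 5.25


def run_sequence(steps, meter):
    """Sequencing engine: run each named step in order, collect results."""
    results = {}
    for name, step in steps:
        results[name] = "PASS" if step(meter) else "FAIL"
    return results


sequence = [("supply_voltage", supply_voltage_test)]
passing = run_sequence(sequence, SimulatedVoltmeter(5.10))
failing = run_sequence(sequence, SimulatedVoltmeter(5.60))
```

Because the test module depends only on the `Voltmeter` interface, a real driver can replace the simulated one without changing the test or the sequencing engine.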

4. Tailor the Architecture to the Organization

Each organization has test system requirements that are specific to its products, quality standards, information systems, and manufacturing strategy. Because of these variations, there is no off-the-shelf, one-size-fits-all solution. The final system design is driven by many factors such as development cost, organization size, quantity of test stations and factories, number of product variations, extent of factory automation, and many others. In each area of the test development model, several solutions can be created to meet the requirements in the following processes:

- Test Design and Implementation includes creating and editing test tools, which requires software that can be kept standard across all applications regardless of size. By contrast, test sequence editing and change management systems can vary greatly based on application size – small-scale systems can use a periodic file backup, while large systems need custom tools to automate release and deployment of test software.

- Test System Administration can involve a simple set of editable text files to store configuration data like calibration values, station options, and product information when working with just a few test stations. For more than a few test stations, it is ideal to implement a custom configuration database and editing interface.

- Test Execution can vary from a completely manual process to a fully automated one. Each company must consider the tradeoffs with either approach. One critical consideration is that in a fully automated process, error handling must run perfectly.

- Test Process Control and Data Analysis may be achieved easily by periodically collecting test data and charting it in a spreadsheet or third-party statistics package such as Applied Statistics. However, with minimal effort, a developer can implement a customized process control interface to execute remotely from the test station and collect test results as they are generated.
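
For the small-scale case described above, where station configuration lives in editable text files, one possible shape is an INI-style file read with Python's standard `configparser` module. The section names, keys, and values below are invented for this sketch, as is the simple gain-and-offset calibration model.

```python
import configparser

# Hypothetical station file contents; in practice this would be read
# from a text file kept on or near the test station.
STATION_CONFIG = """
[station]
id = STATION-01

[calibration]
voltage_offset = 0.012
voltage_gain = 1.003

[product]
model = WIDGET-A
"""


def load_station_config(text):
    """Parse station settings from INI-formatted text."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return {
        "station_id": parser.get("station", "id"),
        "voltage_offset": parser.getfloat("calibration", "voltage_offset"),
        "voltage_gain": parser.getfloat("calibration", "voltage_gain"),
        "model": parser.get("product", "model"),
    }


def apply_calibration(raw_voltage, config):
    # Correct a raw reading with the station's stored gain and offset.
    return raw_voltage * config["voltage_gain"] + config["voltage_offset"]


config = load_station_config(STATION_CONFIG)
corrected = apply_calibration(5.0, config)
```

A file like this is easy to edit by hand on a few stations. Once station counts grow, the same keys could move into a configuration database without changing how the test code consumes them.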

A test architecture that can be developed rapidly, meets current requirements, and scales easily as more test stations and higher throughput are needed is a critical asset to a company. For a full and detailed understanding of the framework for designing a test and measurement system for use in a production environment, read the complete white paper.